Application Vulnerability Patterns

*This post originally appeared on the RiskSense blog prior to the acquisition in August 2021, when RiskSense became part of Ivanti.

Introduction

According to Imperva’s 2019 Cyberthreat Defense Report [1], a staggering 78% of organizations were affected by a successful cyberattack in the past year. Imperva also found that application development and testing is the biggest security challenge organizations struggle with. As modern applications grow more complex, organizations must invest more in reducing their attack surface and managing vulnerabilities. Unfortunately, there is no consistent or clear way to measure a vulnerability’s risk without knowing the complete context in which it was found. So how do we prioritize vulnerabilities?

Various risk scoring systems and taxonomies are widely used to prioritize vulnerabilities, such as the OWASP Top 10, the 2019 CWE Top 25, and the Common Vulnerability Scoring System (CVSS). Many organizations use the OWASP Top 10 and CVSS scores to prioritize vulnerabilities, but these systems fail to account for contextual factors and lack consistency in their scoring methodologies. Understanding the vulnerability patterns and biases of application scanners, penetration testers, and bug bounties is essential for organizations to implement effective remediation strategies.

Background

The Common Weakness Enumeration (CWE) is a dictionary of software weaknesses and vulnerabilities maintained by the MITRE Corporation. The 2019 CWE Top 25 lists the most widespread and critical weaknesses and ranks them using public data collected in the National Vulnerability Database (NVD), including CVSS scores.
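The ranking step can be illustrated with a short sketch. As we understand MITRE’s published methodology for the 2019 list, each CWE gets a frequency term Fr (its NVD count, min-max normalized across CWEs) and a severity term Sv (its average CVSS score, min-max normalized), combined as Score = Fr × Sv × 100. The `top25_scores` function, the choice to normalize severity over the per-CWE averages, and all counts and CVSS averages below are hypothetical illustrations, not MITRE’s code or NVD data.

```python
from dataclasses import dataclass


@dataclass
class CweStats:
    cwe: str
    count: int        # number of NVD entries mapped to this CWE (hypothetical)
    avg_cvss: float   # average CVSS base score of those entries (hypothetical)


def top25_scores(stats: list[CweStats]) -> dict[str, float]:
    """Score = Fr * Sv * 100, with Fr and Sv min-max normalized across CWEs.

    Assumption: severity is normalized over the per-CWE average CVSS scores.
    """
    counts = [s.count for s in stats]
    avgs = [s.avg_cvss for s in stats]
    c_lo, c_hi = min(counts), max(counts)
    v_lo, v_hi = min(avgs), max(avgs)
    scores = {}
    for s in stats:
        fr = (s.count - c_lo) / (c_hi - c_lo) if c_hi > c_lo else 0.0
        sv = (s.avg_cvss - v_lo) / (v_hi - v_lo) if v_hi > v_lo else 0.0
        scores[s.cwe] = fr * sv * 100
    return scores


if __name__ == "__main__":
    # Hypothetical numbers for illustration only.
    sample = [
        CweStats("CWE-79", 2000, 5.8),
        CweStats("CWE-89", 1000, 8.5),
        CweStats("CWE-352", 500, 7.0),
        CweStats("CWE-200", 1200, 5.5),
    ]
    for cwe, score in sorted(top25_scores(sample).items(), key=lambda kv: -kv[1]):
        print(f"{cwe}: {score:.1f}")
```

With these made-up numbers, the less frequent but more severe CWE-89 outranks CWE-79, and any CWE sitting at the minimum count or minimum severity scores zero, a side effect of min-max normalization.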

The Common Attack Pattern Enumeration and Classification (CAPEC), also compiled by MITRE, is a public dictionary of attack patterns. CAPEC IDs are more specific than CWE classifications, which can lessen the effect of encountering multiple CWE IDs with different severity levels for the same vulnerability.

The OWASP Top 10 is a well-respected and influential document for web application security that many organizations have adopted to minimize risk. Some CWE IDs map to an OWASP category, but not all will fall into one of the 10 categories.
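Because not every CWE lands in an OWASP category, any count of "vulnerabilities with an OWASP mapping" first has to separate mapped from unmapped findings. The sketch below assumes a lookup table built only from the CWE/OWASP pairs in the HackerOne table later in this post (Figure 8); `CWE_TO_OWASP`, `owasp_category`, and `split_by_mapping` are hypothetical names, and the table is not an official or complete mapping.

```python
from typing import Iterable, Optional

# Hypothetical CWE -> OWASP Top 10 lookup, limited to the pairs shown in
# Figure 8 of this post. CWEs absent from the dict have no OWASP category.
CWE_TO_OWASP = {79: "A7", 287: "A2", 200: "A5", 89: "A1", 94: "A1", 284: "A5"}


def owasp_category(cwe: int) -> Optional[str]:
    """Return the OWASP category for a CWE ID, or None if it is unmapped."""
    return CWE_TO_OWASP.get(cwe)


def split_by_mapping(cwes: Iterable[int]) -> tuple[list[int], list[int]]:
    """Split CWE IDs into (mapped, unmapped) lists, preserving order."""
    mapped, unmapped = [], []
    for cwe in cwes:
        (mapped if owasp_category(cwe) is not None else unmapped).append(cwe)
    return mapped, unmapped


if __name__ == "__main__":
    # CWE 918 (SSRF) and CWE 352 (CSRF) have no OWASP category in Figure 8.
    mapped, unmapped = split_by_mapping([79, 79, 89, 918, 352, 200])
    print("mapped:", mapped, "unmapped:", unmapped)
```

Only the mapped list would feed percentages such as "A7 accounts for over half of vulnerabilities that have an OWASP mapping."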

Each of the aforementioned models brings its own perspective and adds to the understanding of application vulnerabilities. However, the models naturally differ from one another, and it is important for organizations to understand those differences and how each highlights different aspects of application security.

Comparing Taxonomies Based on Real-World Data

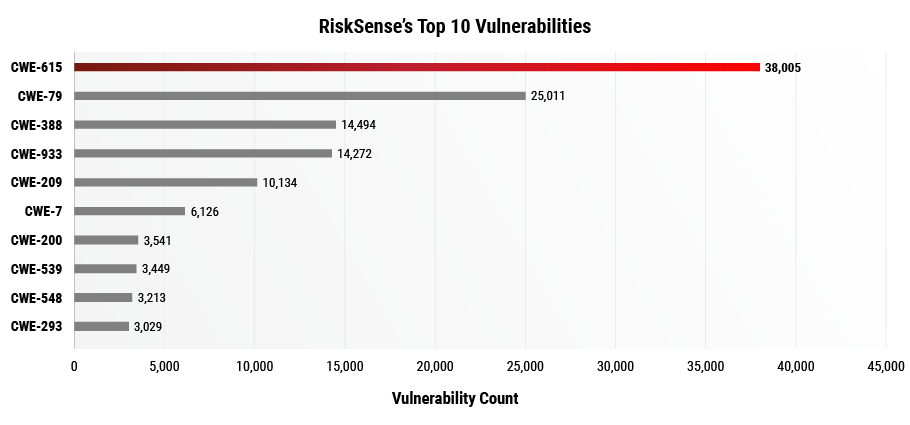

One of the difficulties in measuring risk is that application vulnerabilities are unique in nature and cannot always be classified into one of these taxonomies. To better understand each scoring model, we analyzed 418,160 vulnerabilities from 1,787 different web applications and examined how each taxonomy classified them. Application scanners provide classification mappings for vulnerabilities, which are then represented in RiskSense’s platform. Figure 1 below shows the common weaknesses (CWEs) based on this analysis.

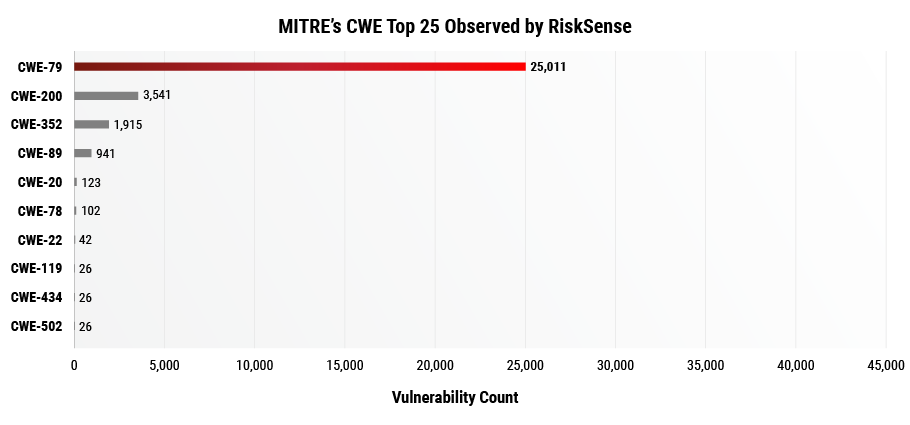

Information Exposure Through Comments (CWE 615) and Cross-Site Scripting (CWE 79) account for about 52% of vulnerabilities with a CWE mapping. Notice that CWEs 615, 209, 200, 539, and 548, half of the list, all center on information exposure. These patterns align with MITRE’s CWE Top 25 Observed by RiskSense, shown in Figure 2, which also lists Cross-Site Scripting and information exposure among the top vulnerabilities.

Figure 2. To keep the comparison consistent, only the 10 most frequently observed CWEs are shown in the graph above.

The share of CWE 79 (XSS) alone rises from 31% in Figure 1 to 79% in Figure 2, where the data is filtered by the 2019 CWE Top 25. In addition, the share of CWE 200 (Information Exposure) in the dataset decreases from 21% to 11%. Furthermore, CWE 89 (SQL Injection), CWE 78 (Command Injection), and CWE 352 (CSRF) appear among the top weaknesses once the data is filtered by the 2019 CWE Top 25 list. However, volume alone isn’t the whole story when prioritizing vulnerability patterns.
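One reason a single CWE’s share can jump after filtering is that the denominator shrinks: dropping CWEs that are not on the list leaves fewer vulnerabilities to divide by. The sketch below uses made-up counts and a hypothetical `TOP25_SUBSET` containing only the 2019 CWE Top 25 IDs discussed in this post (not the full list). It illustrates the arithmetic, not RiskSense’s pipeline.

```python
from collections import Counter

# Only the 2019 CWE Top 25 IDs discussed in this post; not the full list.
TOP25_SUBSET = {79, 89, 78, 352, 200}


def shares(counts: Counter) -> dict[int, float]:
    """Each CWE's fraction of the total count."""
    total = sum(counts.values())
    return {cwe: n / total for cwe, n in counts.items()}


def keep_listed(counts: Counter, allowed: set[int]) -> Counter:
    """Drop CWEs that are not in the allowed list."""
    return Counter({cwe: n for cwe, n in counts.items() if cwe in allowed})


if __name__ == "__main__":
    # Made-up counts. CWEs 615, 209, 548, and 539 are not on the
    # 2019 Top 25, so filtering removes them.
    observed = Counter({615: 300, 79: 400, 209: 150, 548: 100, 539: 30, 89: 20})
    before = shares(observed)
    after = shares(keep_listed(observed, TOP25_SUBSET))
    print(f"CWE 79 share: {before[79]:.0%} before, {after[79]:.0%} after filtering")
```

With these hypothetical counts, CWE 79 goes from 40% of all mapped findings to about 95% of the filtered ones, even though its own count never changes.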

Some vulnerabilities have a greater impact on an application and carry more risk to an organization because they are easier to exploit than others. When completing an assessment, RiskSense penetration testers take the time to comment on specific vulnerabilities that may have a larger impact if successfully exploited or that are easy to exploit. This added human-cognition factor is not reflected in the full dataset of 418,160 vulnerabilities, so we narrowed it to a subset of 60,454 pen-tester-validated vulnerabilities. Each of these vulnerabilities has a manual finding report (MFR) that documents how the weakness can be exploited, accompanied by screenshots and related evidence. This brings a more offensive approach to the dataset, one that better reflects how an attacker might prioritize vulnerabilities in a real-world attack. The pen-tester-validated dataset was collected from 751 web applications and analyzed against the same taxonomies and scoring systems described above.

Figure 3. Top Vulnerabilities Across Taxonomies

| No. | CWE | 2019 CWE Top 25 | OWASP Top 10 |
|---|---|---|---|
| 1 | CWE 79: Improper Neutralization of Input During Web Page Generation (‘Cross-Site Scripting’) | CWE 79: Improper Neutralization of Input During Web Page Generation (‘Cross-Site Scripting’) | A7: Cross-Site Scripting (XSS) |
| 2 | CWE 209: Information Exposure Through an Error Message | CWE 89: Improper Neutralization of Special Elements used in an SQL Command (‘SQL Injection’) | A6: Security Misconfiguration |
| 3 | CWE 933: OWASP Top Ten 2013 Category A5 – Security Misconfiguration | CWE 352: Cross-Site Request Forgery (CSRF) | A3: Sensitive Data Exposure |

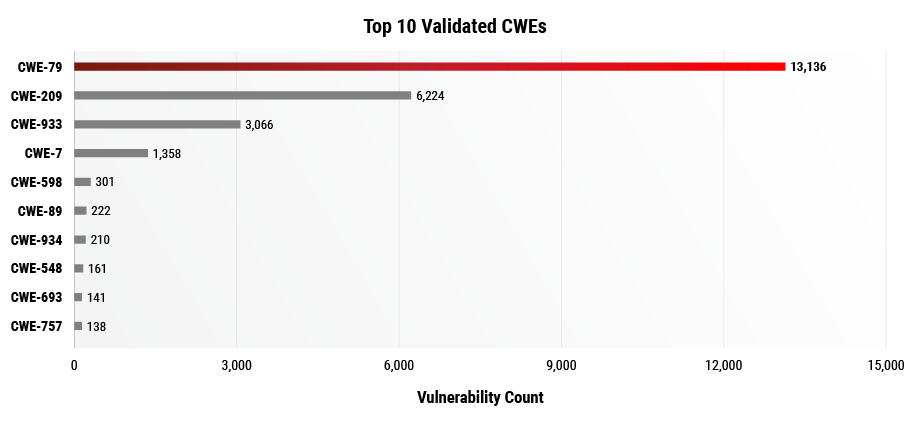

As the table above shows, Cross-Site Scripting (XSS) is the most common vulnerability type across all three classifications, whereas in Figure 1 it ranked second among the Top 10 vulnerabilities. Filtering out vulnerabilities that were not pen tester validated gives a better representation of current vulnerability patterns. Filtering the validated data by CWE produces the Top 10 Validated CWEs in Figure 4 below.
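The re-ranking that happens when only pen-tester-validated findings are kept can be sketched as a filter followed by a frequency count. Here a finding counts as validated if it carries a manual finding report (MFR); the `Finding` type, its `has_mfr` flag, the helper names, and the sample data are hypothetical and only mimic the kind of shift described above.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class Finding:
    cwe: int
    has_mfr: bool  # True if a pen tester attached a manual finding report


def rank_cwes(findings: list[Finding]) -> list[int]:
    """Return CWE IDs ordered from most to least frequent."""
    counts = Counter(f.cwe for f in findings)
    return [cwe for cwe, _ in counts.most_common()]


def validated_only(findings: list[Finding]) -> list[Finding]:
    """Keep only findings backed by a manual finding report."""
    return [f for f in findings if f.has_mfr]


# Hypothetical sample: scanner-only CWE 615 findings dominate the raw counts.
SAMPLE = (
    [Finding(615, False)] * 5
    + [Finding(79, True)] * 3
    + [Finding(79, False)]
    + [Finding(89, True)]
)

if __name__ == "__main__":
    print("all findings:", rank_cwes(SAMPLE))
    print("validated only:", rank_cwes(validated_only(SAMPLE)))
```

In this sample, CWE 615 leads the raw ranking but disappears once unvalidated findings are removed, leaving CWE 79 on top.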

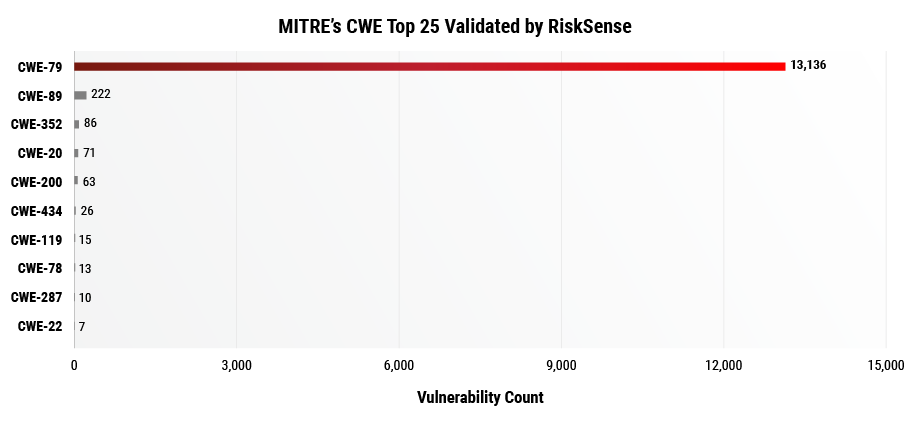

The figure above shows CWE 79 (XSS) accounting for over half of the vulnerabilities that have a CWE mapping. Information Exposure is still prevalent among the Top 10 vulnerabilities in the refined dataset, covering 28% of the Top 10 Validated CWEs. In both Figure 4 and Figure 5, the share of CWE 89 (SQL Injection) has increased; it is now the second most frequent Top 25 CWE validated by RiskSense.

Figure 5. To keep the comparison consistent, only the 10 most frequently observed CWEs are shown in the graph above.

Filtered by the MITRE CWE Top 25, CWE 352 remains the third most frequent vulnerability in both datasets. Note, however, the skewed distribution: CWE 79 (XSS) accounts for 96% of the vulnerabilities that have a CWE mapping on the 2019 CWE Top 25 list. CWE 89 (SQL Injection) covers about 2% of validated vulnerabilities, and every other CWE individually covers fewer than 100 vulnerabilities. When the data is filtered by the OWASP Top 10, Cross-Site Scripting is again the most prevalent vulnerability, as seen in the figure below.

Figure 6.

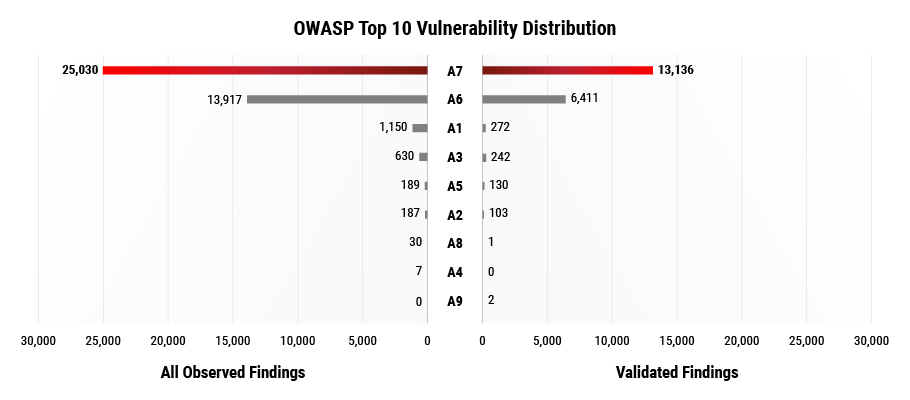

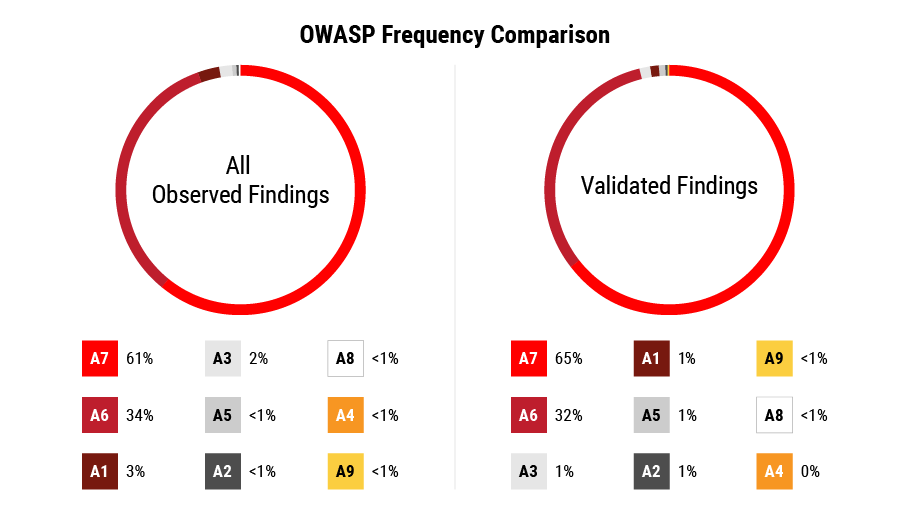

Cross-Site Scripting accounts for 25,030 application vulnerabilities, 13,136 of which are pen tester validated. OWASP category A7 (XSS) accounts for over half of the vulnerabilities that have an OWASP mapping in both datasets above. The second most common OWASP category is A6 Security Misconfiguration, covering about 34% of observed vulnerabilities and 32% of validated vulnerabilities, as shown in Figure 7 below.

Figure 7. Note that A10 isn’t present in the figure above because there were no vulnerabilities that mapped to A10 in either dataset.

The distribution varies little between the two datasets, except that A3 Sensitive Data Exposure overtakes A1 Injection for third place in the subset of 60,434 validated vulnerabilities. A3 covers 1.34% and A1 covers 1.19% of pen tester validated vulnerabilities; the difference between the A1 and A3 mappings is only 30 validated vulnerabilities out of the 20,297 with an OWASP mapping. This analysis agrees with MITRE’s CWE Top 25 and the Top 10 Validated CWE results. Based on our observations, Cross-Site Scripting and Injection appear among the highest-risk vulnerabilities in all three taxonomies.
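As a quick consistency check: if both percentages are shares of the 20,297 validated vulnerabilities with an OWASP mapping (our reading, since the text does not state the base explicitly), the gap works out to roughly the 30 vulnerabilities quoted, allowing for rounding of the percentages.

```latex
\[
(1.34\% - 1.19\%) \times 20{,}297 = 0.0015 \times 20{,}297 \approx 30.4 \approx 30
\]
```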

Comparing Pen Testers vs. Bug Bounty Programs

RiskSense penetration testers attack an organization’s system as an attacker would in order to test the organization’s security defenses. Their goal is to perform a full compromise and achieve lateral movement within a network, whereas bug bounty hunters simply compete for bug discovery and look for specific vulnerabilities.

Bug bounty programs can provide another real-world perspective on how vulnerabilities are valued and prioritized. For example, HackerOne provides a vulnerability coordination and bug bounty platform that helps businesses reduce their risk by connecting them with penetration testers and cybersecurity researchers. Its Top 10 Most Impactful and Rewarded Vulnerability Types explores severity scores and bounty award levels for the most reported vulnerability types.

Both angles provide valuable insight, but the motivations of each group can lead to differing perspectives on vulnerability prioritization. Bug bounty programs give an outlook on what vulnerabilities software vendors are willing to pay the most for, while pen testers provide intelligence into which vulnerabilities are most valuable to an attacker. Figure 8 shows the Top 10 vulnerabilities observed by HackerOne.

Figure 8. Top 10 Vulnerabilities Observed by HackerOne

| No. | HackerOne Category | CWE | OWASP |
|---|---|---|---|
| 1 | Cross Site Scripting (XSS) | 79 | A7 |
| 2 | Improper Authentication | 287 | A2 |
| 3 | Information Disclosure | 200 | A5 |
| 4 | Privilege Escalation | 233 | A5 |
| 5 | SQL Injection | 89 | A1 |
| 6 | Code Injection | 94 | A1 |
| 7 | Server-Side Request Forgery (SSRF) | 918 | – |
| 8 | Insecure Direct Object Reference (IDOR) | – | A5 |
| 9 | Improper Access Control | 284 | A5 |
| 10 | Cross-Site Request Forgery (CSRF) | 352 | – |

As the table above shows, the most impactful and rewarded vulnerability type on the list is Cross-Site Scripting. CWE 89 (SQL Injection) is fifth on HackerOne’s Top 10, sixth on RiskSense’s Top 10 Validated CWEs, and second on MITRE’s CWE Top 25 Validated by RiskSense. CWE 352 (Cross-Site Request Forgery) comes in 10th on the HackerOne Top 10 and third on MITRE’s CWE Top 25 Validated by RiskSense; however, it does not make RiskSense’s Top 10 Validated CWEs.

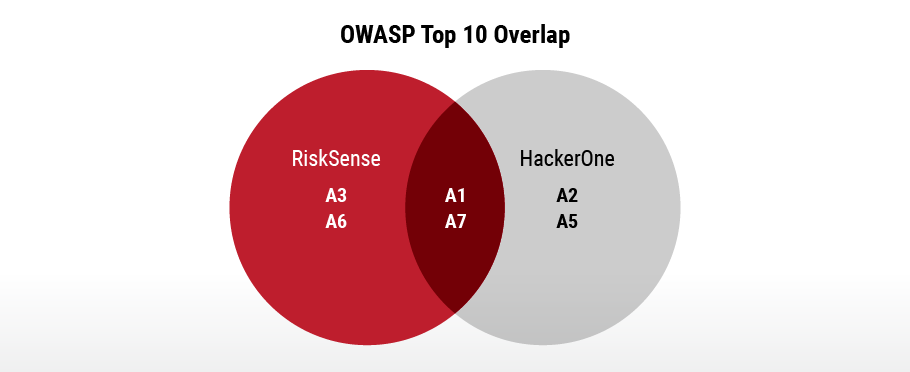

RiskSense’s Top 10 Validated CWEs map to four of the ten OWASP Top 10 categories (a 40% overlap): A1 Injection, A3 Sensitive Data Exposure, A6 Security Misconfiguration, and A7 XSS. HackerOne’s Top 10 Most Impactful and Rewarded Vulnerability Types likewise overlap 40% with the OWASP Top 10, covering A1 Injection, A2 Broken Authentication, A5 Broken Access Control, and A7 XSS. Figure 9 below shows the overlap of the OWASP categories found in RiskSense’s Top 10 Validated CWEs and HackerOne’s Top 10.

Figure 9. None of A4, A8, A9, or A10 maps to a CWE found in either the HackerOne Top 10 or RiskSense’s Top 10 Validated CWEs.

OWASP A1 includes HackerOne’s fifth and sixth entries, SQL Injection and Code Injection, and is the second most common OWASP category found in the RiskSense application vulnerability data. A7, which includes CWE 79 (XSS), is the top OWASP category for both HackerOne and RiskSense.
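The overlap figures above reduce to simple set operations over OWASP category labels. In this sketch the RiskSense set is taken from the categories listed in the text, and the HackerOne set is derived from the OWASP column of Figure 8, with `None` standing in for the table’s "–" entries; the variable names and the `coverage` helper are our own illustration.

```python
# OWASP Top 10 category labels A1..A10.
OWASP_TOP10 = {f"A{i}" for i in range(1, 11)}

# Categories covered by RiskSense's Top 10 Validated CWEs (from the text).
RISKSENSE = {"A1", "A3", "A6", "A7"}

# OWASP column of HackerOne's Top 10 (Figure 8); None where the table shows "–".
HACKERONE_ROWS = ["A7", "A2", "A5", "A5", "A1", "A1", None, "A5", "A5", None]
HACKERONE = {c for c in HACKERONE_ROWS if c is not None}


def coverage(categories: set[str]) -> float:
    """Fraction of the ten OWASP Top 10 categories that a list touches."""
    return len(categories & OWASP_TOP10) / len(OWASP_TOP10)


if __name__ == "__main__":
    print("RiskSense coverage:", coverage(RISKSENSE))
    print("HackerOne coverage:", coverage(HACKERONE))
    print("shared:", sorted(RISKSENSE & HACKERONE))
    print("in neither:", sorted(OWASP_TOP10 - (RISKSENSE | HACKERONE)))
```

Both lists touch 4 of the 10 categories, share only A1 and A7, and leave A4, A8, A9, and A10 uncovered, which matches the Figure 9 caption.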

Despite the different approaches of the scoring and categorical systems, RiskSense’s results don’t stray far from HackerOne’s conclusions, which were drawn from a dataset of over 120,000 security weaknesses reported from 2015 to 2018. These weaknesses were reported by the hacker community, and their classifications were confirmed by HackerOne customers. Because those classifications include weakness type, impact, and severity, this process can introduce data bias: HackerOne customers may misclassify vulnerabilities or favor certain weakness types when classifying them.

Conclusion

The 2019 CWE Top 25, CWE, and OWASP classifications differ in terminology as well as in the factors they weigh when measuring risk. This discrepancy between popular taxonomies makes it difficult to properly quantify the amount of risk a vulnerability presents to an application and/or organization. While the OWASP Foundation stresses to the community that the type of vulnerability matters, the context in which the vulnerability lies is equally significant.

The two vulnerability patterns consistent across the RiskSense and HackerOne results are Cross-Site Scripting and Injection. RiskSense’s dataset represents what application scanners tend to find and rank as high risk, whereas HackerOne’s results follow the trends of what companies pay the most for in their bug bounty programs. When penetration testers complete an assessment, they act as an attacker would to steal sensitive information or compromise an application. Figure 10 captures the top vulnerability patterns based on frequency and risk, according to penetration testers.

Figure 10. Vulnerability Patterns Observed by Penetration Testers (columns: Most Common, Most Risk)

The Most Common column on the left is comparable to the top vulnerabilities in RiskSense’s data, and the Most Risk column on the right, which reflects risk to an organization, is comparable to the vulnerabilities that companies are willing to pay for. Cross-Site Scripting is the most common vulnerability seen by penetration testers, and SQL Injection ranks second among the vulnerabilities that present the most risk. It is important to evaluate vulnerability patterns from all three points of view to optimize prioritization.

Many of today’s common prioritization methodologies fail to take into account the human cognitive aspect that is critical for a remediation process to be effective. In order to improve risk-based vulnerability management policies, a comprehensive risk scoring system that fuses human cognition into its methodology is paramount. Doing so will put organizations in a position to align their security actions toward resolving what attackers hope to exploit, thus decreasing their attractiveness as a target.

[1] Imperva. 2019 Cyberthreat Defense Report. CyberEdge Group, LLC, 2019. www.imperva.com/resources/reports/CyberEdge-2019-CDR-Report-v1.1.pdf.